AI could spark a nuclear apocalypse by 2040, new study warns

06/03/2018 / By Lance D Johnson

A new study conducted by the RAND Corporation warns that advances in artificial intelligence could spark a nuclear apocalypse as soon as 2040. The researchers gathered information from experts in nuclear issues, government, AI research, AI policy, and national security. According to the paper, AI machines might not destroy the world autonomously, but artificial intelligence could encourage humans to take apocalyptic risks with military decisions.

As AI improves at detection, tracking, and targeting, humans will inevitably place more trust in it. The intelligence that AI provides could escalate wartime tensions and encourage bold, calculated decisions. As armies come to rely on AI to interpret data, they will be more apt to take drastic measures against one another. It will be like playing chess against a computer that can predict your future moves and make decisions accordingly.

Since 1945, the threat of mutually assured destruction through nuclear war has kept countries accountable to one another. With AI calculating risks more efficiently, armies will be able to attack with greater precision. Trusting in AI, humans may grow more willing to use nuclear weapons if they believe they can predict and blunt retaliation. Opposing forces, in turn, may see nuclear weapons as their only way out.

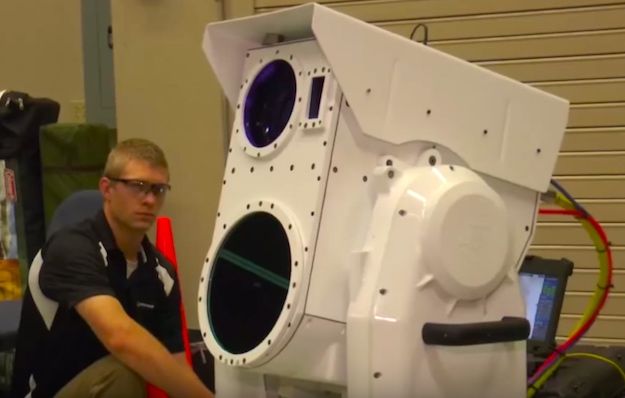

In the paper, researchers highlight the potential of AI to erode the condition of mutually assured destruction, thereby undermining strategic stability. Humans could take more calculated risks with nuclear weapons if they come to trust the AI’s understanding of data. An improvement in sensor technology, for example, could help one side take out opposing submarines, giving it bargaining leverage in an escalating conflict. AI could give armies the knowledge they need to make risky moves that give them the upper hand in battle.

How might a growing dependence on AI change human thinking?

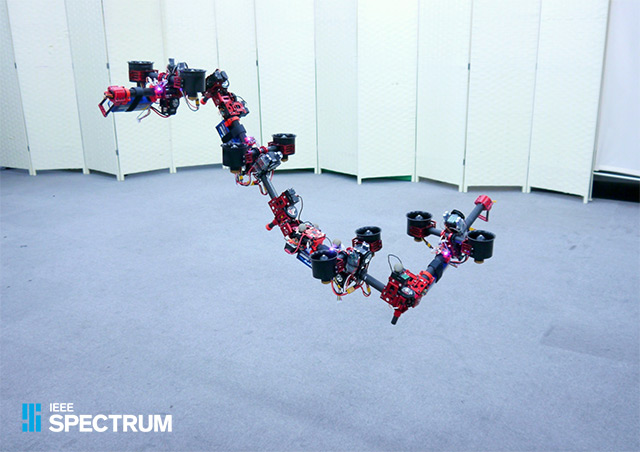

Military applications were among the earliest intended uses of AI. The Survivable Adaptive Planning Experiment of the 1980s sought to use AI to translate reconnaissance data into improved nuclear targeting plans. Today, the Department of Defense is working with Google to integrate AI into military intelligence. At least a dozen Google employees have resigned in protest of the company’s partnership with the Department of Defense on AI for military drones. That effort, Project Maven, seeks to incorporate AI into drones to scan images, identify targets, and classify images of objects and people to “augment or automate Processing, Exploitation and Dissemination (PED) for unmanned aerial vehicles.”

Improved analytics could help militaries interpret their opponents’ actions, too. This could help humans understand the motives behind an adversary’s decision and could lead to more strategic retaliation as the AI predicts behavior. Then again, what if the AI misreads the data, pushing humans toward decisions that are in no one’s best interest?

“Some experts fear that an increased reliance on artificial intelligence can lead to new types of catastrophic mistakes,” said Andrew Lohn, co-author on the paper and associate engineer at RAND. “There may be pressure to use AI before it is technologically mature, or it may be susceptible to adversarial subversion. Therefore, maintaining strategic stability in coming decades may prove extremely difficult and all nuclear powers must participate in the cultivation of institutions to help limit nuclear risk.”

How will adversaries perceive the AI capabilities of a geopolitical rival? Will their fears and suspicions lead to conflict? How might adversaries use artificial intelligence against one another, and will this escalate risk and casualties? An apocalypse might not come from machines taking over the world by themselves; it could come from human trust in machine intelligence that lays waste to the world.

For more on the dangers of AI and nuclear war, visit Nuclear.News.
